Artificial Intelligence (AI) poses risks of considerable concern to academics, auditors, policymakers, AI companies, and the public. The AI Risk Repository serves as a common frame of reference for these risks, comprising a database of 777 risks extracted from 43 taxonomies. The database can be filtered using two overarching taxonomies. The AI Risk Repository is the first attempt to curate, analyze, and extract AI risk frameworks into a publicly accessible, comprehensive, extensible, and categorized risk database.

Download: MIT AI Risk Repository, December 2024 update (PDF, 2 MB)

Using the AI Risk Repository

The AI Risk Repository can be used to:

- Provide an accessible overview of threats from AI
- Provide a regularly updated source of information about new risks and research
- Create a common frame of reference for researchers, developers, businesses, evaluators, auditors, policymakers, and regulators
- Help develop research, curricula, audits, and policy
- Help easily find relevant risks and research

AI Risk Database

The AI Risk Database links each risk to the source information (paper title, authors), supporting evidence (quotes, page numbers), and to Causal and Domain Taxonomies. It is available on Google Sheets or OneDrive.

Download: airisksmit.pdf (2 MB)

Taxonomies of AI Risks

The AI Risk Repository uses two taxonomies of AI risk:

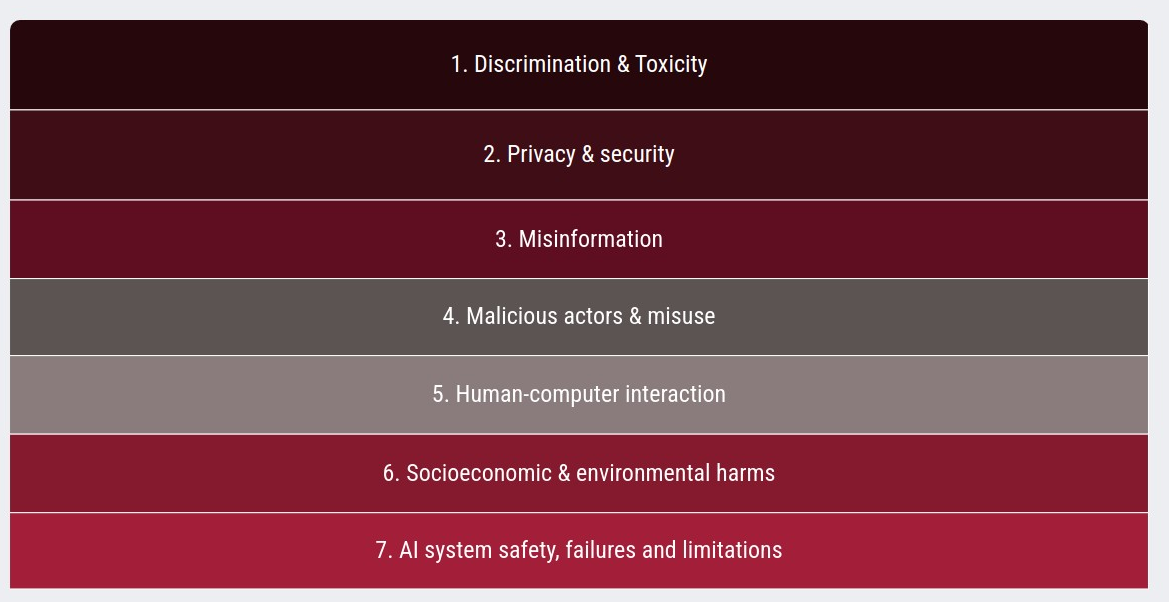

- Causal Taxonomy of AI Risks: Classifies each risk by its causal factors:
  - Entity: Human, AI
  - Intentionality: Intentional, Unintentional
  - Timing: Pre-deployment, Post-deployment
- Domain Taxonomy of AI Risks: Classifies risks into seven AI risk domains, which are further divided into 23 subdomains:
  - Discrimination & toxicity
  - Privacy & security
  - Misinformation
  - Malicious actors & misuse
  - Human-computer interaction
  - Socioeconomic & environmental
  - AI system safety, failures, & limitations

The Causal and Domain Taxonomies can be used separately or together to filter the database to identify specific risks. For example, a user could filter for Discrimination & toxicity risks and use the causal filter to identify the intentional and unintentional variations of this risk from different sources. Similarly, they could differentiate between sources which examine Discrimination & toxicity risks where AI is trained on toxic content pre-deployment, and those which examine where AI inadvertently causes harm post-deployment by showing toxic content.
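The combined filtering described above can be sketched in a few lines of Python. This is a minimal illustration, not the repository's own tooling: the `RiskEntry` record, its field names, the `filter_risks` helper, and the four placeholder rows ("Source A" to "Source D") are hypothetical, and the real database, distributed as a spreadsheet, uses its own columns. Only the category values (Human/AI, Intentional/Unintentional, Pre-/Post-deployment, and the domain names) come from the taxonomies.

```python
from dataclasses import dataclass
from enum import Enum


class Entity(Enum):
    HUMAN = "Human"
    AI = "AI"


class Intent(Enum):
    INTENTIONAL = "Intentional"
    UNINTENTIONAL = "Unintentional"


class Timing(Enum):
    PRE_DEPLOYMENT = "Pre-deployment"
    POST_DEPLOYMENT = "Post-deployment"


@dataclass(frozen=True)
class RiskEntry:
    # Hypothetical record layout for illustration; not the database's actual schema.
    source_title: str
    domain: str          # one of the seven Domain Taxonomy domains
    entity: Entity       # Causal Taxonomy: who causes the risk
    intent: Intent       # Causal Taxonomy: intentional or not
    timing: Timing       # Causal Taxonomy: before or after deployment


def filter_risks(entries, domain=None, entity=None, intent=None, timing=None):
    """Return the entries that match every filter that is not None."""
    return [
        e for e in entries
        if (domain is None or e.domain == domain)
        and (entity is None or e.entity == entity)
        and (intent is None or e.intent == intent)
        and (timing is None or e.timing == timing)
    ]


# Placeholder rows, invented for the example.
entries = [
    RiskEntry("Source A", "Discrimination & toxicity", Entity.AI, Intent.UNINTENTIONAL, Timing.PRE_DEPLOYMENT),
    RiskEntry("Source B", "Discrimination & toxicity", Entity.AI, Intent.UNINTENTIONAL, Timing.POST_DEPLOYMENT),
    RiskEntry("Source C", "Discrimination & toxicity", Entity.HUMAN, Intent.INTENTIONAL, Timing.POST_DEPLOYMENT),
    RiskEntry("Source D", "Privacy & security", Entity.HUMAN, Intent.INTENTIONAL, Timing.POST_DEPLOYMENT),
]

# Domain filter first, then causal filters on the result.
toxic = filter_risks(entries, domain="Discrimination & toxicity")
pre = filter_risks(toxic, timing=Timing.PRE_DEPLOYMENT)
post = filter_risks(toxic, entity=Entity.AI, timing=Timing.POST_DEPLOYMENT)
print([e.source_title for e in pre])   # ['Source A']
print([e.source_title for e in post])  # ['Source B']
```

Because every filter defaults to `None`, the same helper applies the Domain Taxonomy alone, the Causal Taxonomy alone, or both together.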

Download: ucberkelyrisksofai.pdf (1 MB)

Methodology

The AI Risk Repository was created using a systematic review of taxonomies and other structured classifications of AI risk followed by expert consultation. The creators developed taxonomies of AI risk using a best-fit framework synthesis.

The methodology included:

- A systematic search strategy
- Forwards and backwards searching
- Expert consultation to identify AI risk classifications, frameworks, and taxonomies

Findings

The systematic literature search retrieved 17,288 unique articles from searches and expert consultations. After screening, 43 articles and reports met the eligibility criteria. Most of the papers did not explicitly describe their methodology in any detail.

Applications of the AI Risk Repository

The AI Risk Repository can be used for:

- Onboarding new people to the field of AI risks
- Providing a foundation to build on for complex projects
- Informing the development of narrower or more specific taxonomies
- Using the taxonomy for prioritization, synthesis, or comparison
- Identifying underrepresented areas

Specific applications include:

- Policymakers: Regulation and shared standard development
- Auditors: Developing AI system audits and standards
- Academics: Identifying research gaps and developing education and training
- Industry: Internally evaluating and preparing for risks, and developing related strategy, education, and training

Operationalizing Risk Thresholds

Risk can be defined in terms of likelihood (the probability of an event or negative outcome) and severity of harm (the magnitude of its impact). When likelihood is estimated empirically, for example from a sample of evaluations, the estimate comes with a confidence interval. If that interval overlaps the intolerable risk threshold, the evidence is inconclusive, and more work with a larger sample size is needed to narrow the uncertainty range and give a better assessment of whether the effect is actually below the threshold.
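The decision rule above can be sketched in Python. This is a minimal illustration under stated assumptions: likelihood is estimated as the proportion of evaluation trials (for instance, red-teaming attempts) that produce a specified harmful outcome, and the intolerable threshold is expressed as a probability. The Wilson score interval, the 95% level (z = 1.96), the 0.05 threshold, the helper names, and the trial counts are all illustrative choices, not values from the source. Severity is not modeled here; one option, assumed rather than prescribed, is to set a stricter threshold for more severe harm categories.

```python
import math

# Illustrative sketch: the Wilson interval, z = 1.96 (~95%), the 0.05 threshold,
# and the trial counts below are assumptions, not values from the source.


def wilson_interval(successes: int, trials: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (here, the harm rate)."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    p_hat = successes / trials
    denom = 1 + z**2 / trials
    centre = (p_hat + z**2 / (2 * trials)) / denom
    half = (z / denom) * math.sqrt(p_hat * (1 - p_hat) / trials + z**2 / (4 * trials**2))
    return max(0.0, centre - half), min(1.0, centre + half)


def assess(successes: int, trials: int, threshold: float, z: float = 1.96) -> str:
    """Compare the confidence interval for the harm rate against a threshold."""
    low, high = wilson_interval(successes, trials, z)
    if high < threshold:
        return "below threshold"
    if low > threshold:
        return "above threshold"
    # The interval overlaps the threshold: more data is needed to decide.
    return "inconclusive: collect a larger sample"


THRESHOLD = 0.05  # hypothetical intolerable-risk threshold on likelihood
print(assess(3, 200, THRESHOLD))   # below threshold
print(assess(6, 100, THRESHOLD))   # inconclusive: collect a larger sample
print(assess(40, 200, THRESHOLD))  # above threshold
```

The Wilson interval is used instead of the simpler normal (Wald) interval because it behaves better when the observed proportion is close to 0, which is the usual regime for rare but serious harms. In the inconclusive case, increasing `trials` narrows the interval, which is the "larger sample size" step described above.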

Intolerable Risk Thresholds

This paper provides background on intolerable risk thresholds and proposes recommendations and considerations for developers, third-party organizations, and governments exploring how to define and operationalize such thresholds.

Selected Risk Categories:

- Chemical, Biological, Radiological, and Nuclear (CBRN) Weapons
- Cyber Attacks
- Model Autonomy (loss of human oversight)
- Persuasion and Manipulation
- Deception
- Toxicity (including CSAM, NCII)
- Discrimination
- Socioeconomic Disruption

Other crucial ways to identify risks beyond those explored in the existing literature include extensive open-ended red-teaming activities and other risk estimation techniques.

Limitations

The AI Risk Repository includes 43 documents identified through a comprehensive search. While the methodology was designed to capture the most comprehensive and widely recognized risk taxonomies in the field, it is possible that some emerging risks or documents were missed. The quality of the AI Risk Database is dependent on the documents reviewed. Most do not explicitly define “risk,” and most do not systematically review existing research literature when developing their taxonomies or describe their classification process.

All risks in the AI Risk Database were extracted by a single expert reviewer and author, and all risks were coded against the taxonomies by another single reviewer and author. Although a structured process for extraction and coding was followed, this introduces the potential for errors and subjective bias.

Conclusion

The AI Risk Repository provides a comprehensive and accessible resource for understanding and addressing the risks associated with AI. It is a common foundation for constructive engagement and critique and a starting point for a common frame of reference to understand and address risks from AI.