In February 2023, the military began openly discussing the integration of AI into its operations. In July 2023, it went further, announcing its intention to give AI systems access to highly classified information about military operations. This marked a pivotal moment in the military's adoption of the technology.

By November 2023, the military was again at the center of the discussion, this time considering whether AI could play a role in life-and-death decisions involving human targets. It also explored the possibility of sharing its AI technology with the Five Eyes intelligence alliance, signaling a commitment to international cooperation on military technology.

In a significant move to shape the future of warfare, the United States has launched an initiative to promote international cooperation on the responsible use of artificial intelligence (AI) and autonomous weapons in military operations. The initiative, led by Bonnie Jenkins, the State Department's Under Secretary for Arms Control and International Security, emphasizes establishing strong norms for the military use of AI in light of rapid technological change and its potential impact on warfare.

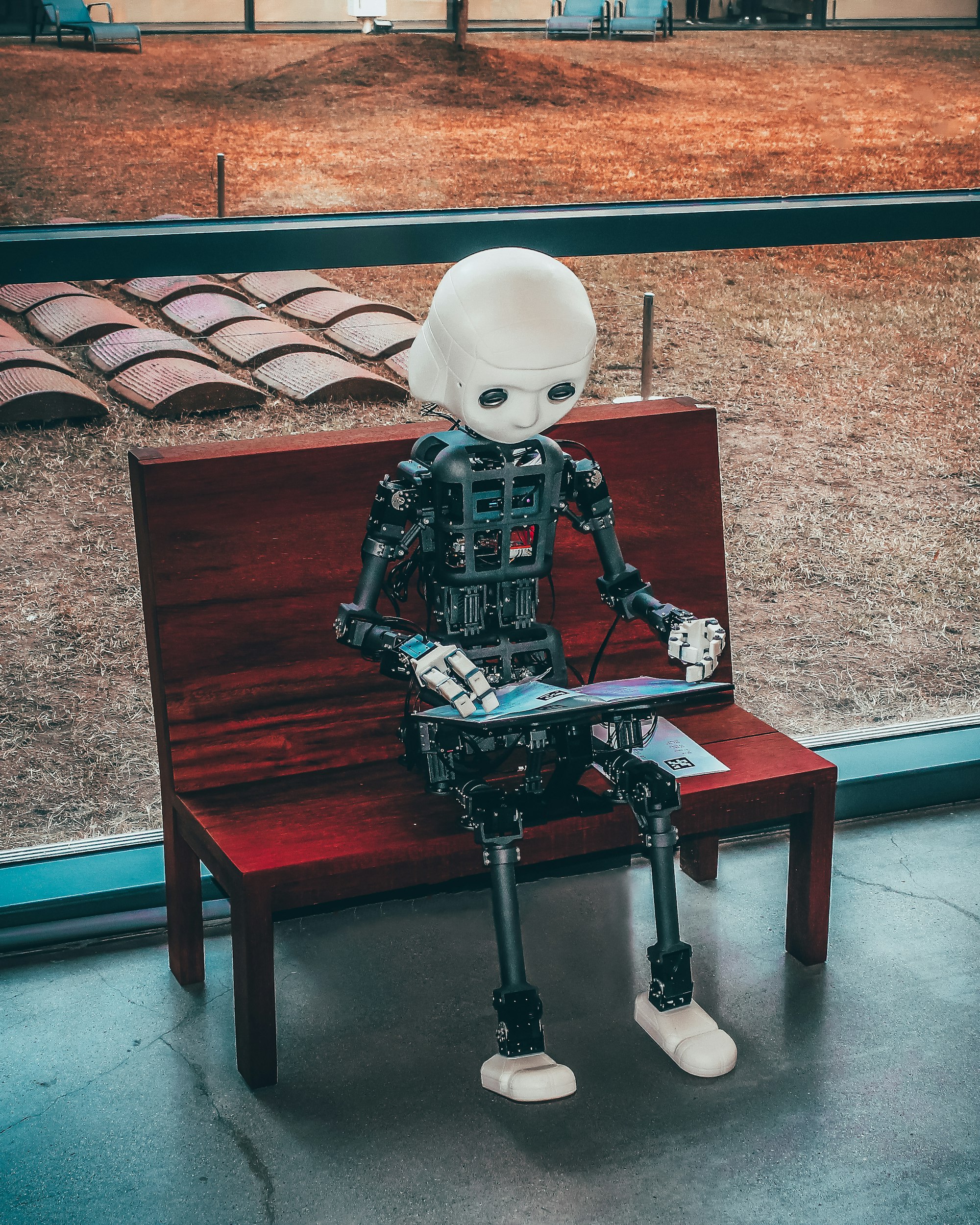

The initiative gained momentum at a two-day conference in The Hague, where advances in drone technology and the prospect of fully autonomous fighting robots were key topics of discussion. The U.S. declaration contains 12 points intended to ensure that the military use of AI complies with international law, with an emphasis on maintaining human control over and involvement in critical decisions, especially those concerning nuclear weapons.

A call to action issued at the conference and joined by 60 nations, including the U.S. and China, urges cooperation on the development and responsible use of AI in military contexts. The effort aims to mitigate the risks associated with AI and keep the technology from spiraling out of control with harmful consequences for humanity. The call to action stresses the importance of safeguards and human oversight in AI systems, while acknowledging the constraints humans face in keeping pace with a rapidly advancing field.

This U.S.-led initiative is a pivotal step in addressing the challenges and ethical concerns posed by AI and autonomous weapons in military operations. By fostering international cooperation and establishing guidelines for responsible use, the U.S. is steering the conversation toward more controlled and ethical use of AI in warfare.